Fastai with Wandb

%load_ext autoreload

%autoreload 2

Imports

from fastai.vision.all import *

from fastai.vision.widgets import *

from aiking.data.external import *

from fastai.callback.wandb import *

import wandb

?wandb.init

Signature:

wandb.init(

job_type: Union[str, NoneType] = None,

dir=None,

config: Union[Dict, str, NoneType] = None,

project: Union[str, NoneType] = None,

entity: Union[str, NoneType] = None,

reinit: bool = None,

tags: Union[Sequence, NoneType] = None,

group: Union[str, NoneType] = None,

name: Union[str, NoneType] = None,

notes: Union[str, NoneType] = None,

magic: Union[dict, str, bool] = None,

config_exclude_keys=None,

config_include_keys=None,

anonymous: Union[str, NoneType] = None,

mode: Union[str, NoneType] = None,

allow_val_change: Union[bool, NoneType] = None,

resume: Union[bool, str, NoneType] = None,

force: Union[bool, NoneType] = None,

tensorboard=None,

sync_tensorboard=None,

monitor_gym=None,

save_code=None,

id=None,

settings: Union[wandb.sdk.wandb_settings.Settings, Dict[str, Any], NoneType] = None,

) -> Union[wandb.sdk.wandb_run.Run, wandb.sdk.lib.disabled.RunDisabled, NoneType]

Docstring:

Start a new tracked run with `wandb.init()`.

In an ML training pipeline, you could add `wandb.init()`

to the beginning of your training script as well as your evaluation

script, and each piece would be tracked as a run in W&B.

`wandb.init()` spawns a new background process to log data to a run, and it

also syncs data to wandb.ai by default so you can see live visualizations.

Call `wandb.init()` to start a run before logging data with `wandb.log()`.

`wandb.init()` returns a run object, and you can also access the run object

with wandb.run.

At the end of your script, we will automatically call `wandb.finish()` to

finalize and clean up the run. However, if you call `wandb.init()` from a

child process, you must explicitly call `wandb.finish()` at the end of the

child process.

Arguments:

project: (str, optional) The name of the project where you're sending

the new run. If the project is not specified, the run is put in an

"Uncategorized" project.

entity: (str, optional) An entity is a username or team name where

you're sending runs. This entity must exist before you can send runs

there, so make sure to create your account or team in the UI before

starting to log runs.

If you don't specify an entity, the run will be sent to your default

entity, which is usually your username. Change your default entity

in [Settings](wandb.ai/settings) under "default location to create

new projects".

config: (dict, argparse, absl.flags, str, optional)

This sets wandb.config, a dictionary-like object for saving inputs

to your job, like hyperparameters for a model or settings for a data

preprocessing job. The config will show up in a table in the UI that

you can use to group, filter, and sort runs. Keys should not contain

`.` in their names, and values should be under 10 MB.

If dict, argparse or absl.flags: will load the key value pairs into

the wandb.config object.

If str: will look for a yaml file by that name, and load config from

that file into the wandb.config object.

save_code: (bool, optional) Turn this on to save the main script or

notebook to W&B. This is valuable for improving experiment

reproducibility and to diff code across experiments in the UI. By

default this is off, but you can flip the default behavior to on

in [Settings](wandb.ai/settings).

group: (str, optional) Specify a group to organize individual runs into

a larger experiment. For example, you might be doing cross

validation, or you might have multiple jobs that train and evaluate

a model against different test sets. Group gives you a way to

organize runs together into a larger whole, and you can toggle this

on and off in the UI. For more details, see

[Grouping](docs.wandb.com/library/grouping).

job_type: (str, optional) Specify the type of run, which is useful when

you're grouping runs together into larger experiments using group.

For example, you might have multiple jobs in a group, with job types

like train and eval. Setting this makes it easy to filter and group

similar runs together in the UI so you can compare apples to apples.

tags: (list, optional) A list of strings, which will populate the list

of tags on this run in the UI. Tags are useful for organizing runs

together, or applying temporary labels like "baseline" or

"production". It's easy to add and remove tags in the UI, or filter

down to just runs with a specific tag.

name: (str, optional) A short display name for this run, which is how

you'll identify this run in the UI. By default we generate a random

two-word name that lets you easily cross-reference runs from the

table to charts. Keeping these run names short makes the chart

legends and tables easier to read. If you're looking for a place to

save your hyperparameters, we recommend saving those in config.

notes: (str, optional) A longer description of the run, like a -m commit

message in git. This helps you remember what you were doing when you

ran this run.

dir: (str, optional) An absolute path to a directory where metadata will

be stored. When you call `download()` on an artifact, this is the

directory where downloaded files will be saved. By default this is

the ./wandb directory.

resume: (bool, str, optional) Sets the resuming behavior. Options:

`"allow"`, `"must"`, `"never"`, `"auto"` or `None`. Defaults to `None`.

Cases:

- `None` (default): If the new run has the same ID as a previous run,

this run overwrites that data.

- `"auto"` (or `True`): if the previous run on this machine crashed,

automatically resume it. Otherwise, start a new run.

- `"allow"`: if id is set with `init(id="UNIQUE_ID")` or

`WANDB_RUN_ID="UNIQUE_ID"` and it is identical to a previous run,

wandb will automatically resume the run with that id. Otherwise,

wandb will start a new run.

- `"never"`: if id is set with `init(id="UNIQUE_ID")` or

`WANDB_RUN_ID="UNIQUE_ID"` and it is identical to a previous run,

wandb will crash.

- `"must"`: if id is set with `init(id="UNIQUE_ID")` or

`WANDB_RUN_ID="UNIQUE_ID"` and it is identical to a previous run,

wandb will automatically resume the run with the id. Otherwise

wandb will crash.

See https://docs.wandb.com/library/advanced/resuming for more.

reinit: (bool, optional) Allow multiple `wandb.init()` calls in the same

process. (default: False)

magic: (bool, dict, or str, optional) The bool controls whether we try to

auto-instrument your script, capturing basic details of your run

without you having to add more wandb code. (default: `False`)

You can also pass a dict, json string, or yaml filename.

config_exclude_keys: (list, optional) string keys to exclude from

`wandb.config`.

config_include_keys: (list, optional) string keys to include in

`wandb.config`.

anonymous: (str, optional) Controls anonymous data logging. Options:

- `"never"` (default): requires you to link your W&B account before

tracking the run so you don't accidentally create an anonymous

run.

- `"allow"`: lets a logged-in user track runs with their account, but

lets someone who is running the script without a W&B account see

the charts in the UI.

- `"must"`: sends the run to an anonymous account instead of to a

signed-up user account.

mode: (str, optional) Can be `"online"`, `"offline"` or `"disabled"`. Defaults to

online.

allow_val_change: (bool, optional) Whether to allow config values to

change after setting the keys once. By default we throw an exception

if a config value is overwritten. If you want to track something

like a varying learning rate at multiple times during training, use

`wandb.log()` instead. (default: `False` in scripts, `True` in Jupyter)

force: (bool, optional) If `True`, this crashes the script if a user isn't

logged in to W&B. If `False`, this will let the script run in offline

mode if a user isn't logged in to W&B. (default: `False`)

sync_tensorboard: (bool, optional) Synchronize wandb logs from tensorboard or

tensorboardX and saves the relevant events file. (default: `False`)

monitor_gym: (bool, optional) Automatically logs videos of environment when

using OpenAI Gym. (default: `False`)

See https://docs.wandb.com/library/integrations/openai-gym

id: (str, optional) A unique ID for this run, used for resuming. It must

be unique in the project, and if you delete a run you can't reuse

the ID. Use the name field for a short descriptive name, or config

for saving hyperparameters to compare across runs. The ID cannot

contain special characters.

See https://docs.wandb.com/library/resuming

Examples:

Basic usage

```

wandb.init()

```

Launch multiple runs from the same script

```

for x in range(10):
    with wandb.init(project="my-projo") as run:
        for y in range(100):
            run.log({"metric": x+y})

```

Raises:

Exception: if problem.

Returns:

A `Run` object.

File: ~/anaconda3/envs/aiking/lib/python3.8/site-packages/wandb/sdk/wandb_init.py

Type: function
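The `resume` cases in the docstring above amount to a small decision table. The sketch below restates them as a plain function so the outcomes can be compared side by side. `resume_outcome` is a hypothetical helper, not part of the wandb API; it only models the documented cases and never contacts W&B.

```python
from typing import Union


def resume_outcome(resume: Union[bool, str, None],
                   id_matches_previous: bool,
                   previous_crashed_here: bool = False) -> str:
    """Return the documented effect of wandb.init(resume=...).

    Hypothetical helper that restates the docstring; it does not call wandb.
    Returns one of "overwrite", "resume", "new" or "crash".
    """
    if resume is True:
        resume = "auto"  # the docstring treats True like "auto"
    if resume is None:
        # Default: a run with the same ID overwrites the earlier data.
        return "overwrite" if id_matches_previous else "new"
    if resume == "auto":
        return "resume" if previous_crashed_here else "new"
    if resume == "allow":
        return "resume" if id_matches_previous else "new"
    if resume == "never":
        return "crash" if id_matches_previous else "new"
    if resume == "must":
        return "resume" if id_matches_previous else "crash"
    # Values the docstring does not describe (e.g. False) are rejected here.
    raise ValueError(f"unsupported resume option: {resume!r}")


print(resume_outcome("must", id_matches_previous=False))
```

The asymmetry between `"allow"` and `"must"` is the practical point: both resume a matching ID, but only `"must"` refuses to start a fresh run when no match exists.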

c = Config()

c.config_path

Path('/Landmark2/pdo/aiking')

wandb.init(project="Fastai_Artsie", name='Cleaned3', dir=c.config_path)

Finishing last run (ID:1trbkn9d) before initializing another...

Waiting for W&B process to finish, PID 18499

Program ended successfully.

Find user logs for this run at:

/Landmark2/pdo/aiking/wandb/run-20210602_124442-1trbkn9d/logs/debug.log

Find internal logs for this run at:

/Landmark2/pdo/aiking/wandb/run-20210602_124442-1trbkn9d/logs/debug-internal.log

Run summary:

| epoch | 9 |

| train_loss | 0.12333 |

| raw_loss | 0.00186 |

| wd_0 | 0.01 |

| sqr_mom_0 | 0.99 |

| lr_0 | 0.0 |

| mom_0 | 0.94913 |

| eps_0 | 1e-05 |

| wd_1 | 0.01 |

| sqr_mom_1 | 0.99 |

| lr_1 | 0.0 |

| mom_1 | 0.94913 |

| eps_1 | 1e-05 |

| wd_2 | 0.01 |

| sqr_mom_2 | 0.99 |

| lr_2 | 1e-05 |

| mom_2 | 0.94913 |

| eps_2 | 1e-05 |

| _runtime | 110 |

| _timestamp | 1622637994 |

| _step | 27 |

| valid_loss | 0.26896 |

| accuracy | 0.92593 |

Run history:

| epoch | ▁▁▂▂▂▂▃▃▃▃▄▄▄▄▅▅▅▆▆▆▆▇▇▇▇██ |

| train_loss | ▇█▇▃▄▄▃▃▃▃▃▂▂▂▂▂▂▂▁▁▁▁▁▁▁▁▁ |

| raw_loss | ▆█▄▃▅▃▃▃▂▂▂▁▁▁▁▂▁▁▁▁▁▁▁▁▁▁▁ |

| wd_0 | ▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁ |

| sqr_mom_0 | ▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁ |

| lr_0 | ▁▄█▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁ |

| mom_0 | █▆▃██▇▅▄▂▁▁▁▁▁▂▂▃▃▄▅▅▆▇▇▇██ |

| eps_0 | ▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁ |

| wd_1 | ▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁ |

| sqr_mom_1 | ▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁ |

| lr_1 | ▁▄█▂▂▃▃▄▅▅▆▆▅▅▅▅▄▄▄▃▃▂▂▂▁▁▁ |

| mom_1 | █▆▃██▇▅▄▂▁▁▁▁▁▂▂▃▃▄▅▅▆▇▇▇██ |

| eps_1 | ▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁ |

| wd_2 | ▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁ |

| sqr_mom_2 | ▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁ |

| lr_2 | ▁▄█▂▂▃▃▄▅▅▆▆▅▅▅▅▄▄▄▃▃▂▂▂▁▁▁ |

| mom_2 | █▆▃██▇▅▄▂▁▁▁▁▁▂▂▃▃▄▅▅▆▇▇▇██ |

| eps_2 | ▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁ |

| _runtime | ▁▁▁▂▂▃▃▃▄▄▄▄▅▅▅▅▅▆▆▆▇▇▇▇███ |

| _timestamp | ▁▁▁▂▂▃▃▃▄▄▄▄▅▅▅▅▅▆▆▆▇▇▇▇███ |

| _step | ▁▁▂▂▂▃▃▃▃▄▄▄▄▅▅▅▅▆▆▆▆▇▇▇▇██ |

| valid_loss | █▃▁▁▁▁▁▁▁ |

| accuracy | ▁▄▆██████ |

Synced 6 W&B file(s), 324 media file(s), 2 artifact file(s) and 0 other file(s)

...Successfully finished last run (ID:1trbkn9d). Initializing new run:

Tracking run with wandb version 0.10.31

Syncing run Cleaned3 to Weights & Biases (Documentation).

Project page: https://wandb.ai/rahuketu86/Fastai_Artsie

Run page: https://wandb.ai/rahuketu86/Fastai_Artsie/runs/6u8vvfk8

Run data is saved locally in

/Landmark2/pdo/aiking/wandb/run-20210602_124640-6u8vvfk8

Run(6u8vvfk8)
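The summary printed for the previous run contains keys such as `lr_0`, `mom_0`, `sqr_mom_0`, `wd_0` and `eps_0`, one set per optimizer parameter group (`_0`, `_1`, `_2`). fastai's `WandbCallback` appears to build these by flattening each group's hyperparameters into `<name>_<group index>` keys. The sketch below reproduces only that naming scheme with plain dictionaries; `flatten_hypers` is a hypothetical helper, and the values are copied from the run summary rather than read from a real optimizer.

```python
from typing import Dict, List


def flatten_hypers(hypers: List[Dict[str, float]]) -> Dict[str, float]:
    """Flatten per-parameter-group hyperparameters into '<name>_<group>' keys.

    Hypothetical helper that mirrors the key names seen in the W&B run summary.
    """
    return {f"{name}_{i}": value
            for i, group in enumerate(hypers)
            for name, value in group.items()}


# Values copied from the run summary above (three parameter groups).
groups = [
    {"wd": 0.01, "sqr_mom": 0.99, "lr": 0.0, "mom": 0.94913, "eps": 1e-05},
    {"wd": 0.01, "sqr_mom": 0.99, "lr": 0.0, "mom": 0.94913, "eps": 1e-05},
    {"wd": 0.01, "sqr_mom": 0.99, "lr": 1e-05, "mom": 0.94913, "eps": 1e-05},
]
flat = flatten_hypers(groups)
print(sorted(flat))
```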

# Alternatively, the run directory can be set via the WANDB_DIR environment variable:

# os.environ['WANDB_DIR']

Constructing Dataset

path = get_ds("Artsie");path

# clstypes = ["Impressionism", "Cubism"]

# dest = "Artsie"

# path = construct_image_dataset(clstypes, dest, key=os.getenv("BING_KEY"), loc=None, count=150, engine='bing'); path

Path('/Landmark2/pdo/aiking/data/Artsie')

(path/"Impressionism").ls()

(#148) [Path('/Landmark2/pdo/aiking/data/Artsie/Impressionism/00000082.jpg'),Path('/Landmark2/pdo/aiking/data/Artsie/Impressionism/00000085.jpg'),Path('/Landmark2/pdo/aiking/data/Artsie/Impressionism/00000054.jpg'),Path('/Landmark2/pdo/aiking/data/Artsie/Impressionism/00000102.jpg'),Path('/Landmark2/pdo/aiking/data/Artsie/Impressionism/00000021.jpg'),Path('/Landmark2/pdo/aiking/data/Artsie/Impressionism/00000105.jpg'),Path('/Landmark2/pdo/aiking/data/Artsie/Impressionism/00000014.jpg'),Path('/Landmark2/pdo/aiking/data/Artsie/Impressionism/00000068.jpg'),Path('/Landmark2/pdo/aiking/data/Artsie/Impressionism/00000130.jpg'),Path('/Landmark2/pdo/aiking/data/Artsie/Impressionism/00000137.jpg')...]
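The dataset uses one sub-directory per class (`Impressionism` above, and presumably `Cubism` according to the commented-out `clstypes`). The `DataBlock` defined next relies on that layout: `get_image_files` collects the images, and `parent_label` uses each file's parent folder name as its label. A stdlib-only sketch of that pairing follows. `labelled_images` is a hypothetical helper, a temporary directory stands in for the real dataset, and the reduced extension set is an assumption (fastai recognises more image types).

```python
import tempfile
from collections import Counter
from pathlib import Path
from typing import List, Tuple

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}  # assumption: reduced extension set


def labelled_images(root: Path) -> List[Tuple[Path, str]]:
    """Pair every image under `root` with the name of its parent folder.

    Hypothetical stand-in for get_image_files + parent_label.
    """
    files = sorted(p for p in root.rglob("*") if p.suffix.lower() in IMAGE_SUFFIXES)
    return [(p, p.parent.name) for p in files]


# Build a tiny fake dataset with the same folder-per-class layout.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for cls, n in [("Impressionism", 3), ("Cubism", 2)]:
        (root / cls).mkdir()
        for i in range(n):
            (root / cls / f"{i:08d}.jpg").touch()
    pairs = labelled_images(root)
    counts = Counter(label for _, label in pairs)
    print(counts)
```

Counting labels like this is a quick way to spot class imbalance before training.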

Define DataBlock

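artsie = DataBlock(
    blocks=(ImageBlock, CategoryBlock),              # image in, single category out
    get_items=get_image_files,                       # collect all image files under path
    splitter=RandomSplitter(valid_pct=0.2, seed=42), # reproducible 80/20 split
    get_y=parent_label,                              # label = name of the parent folder
    item_tfms=Resize(128)                            # resize each image to 128x128
); artsie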

<fastai.data.block.DataBlock at 0x7f27243252e0>
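`RandomSplitter(valid_pct=0.2, seed=42)` shuffles the item indices with a fixed seed and sends the first 20% to the validation set, so the split is reproducible across runs. The sketch below shows the idea with Python's `random` module. `random_split` is hypothetical and will not select the same indices as fastai, which (as I understand it) seeds torch and shuffles with `torch.randperm`.

```python
import random
from typing import List, Sequence, Tuple


def random_split(items: Sequence, valid_pct: float = 0.2,
                 seed: int = 42) -> Tuple[List[int], List[int]]:
    """Return (train_idx, valid_idx) from a seeded shuffle of the indices."""
    idx = list(range(len(items)))
    random.Random(seed).shuffle(idx)   # local RNG, global random state untouched
    cut = int(valid_pct * len(items))  # size of the validation set (rounded down)
    return idx[cut:], idx[:cut]


train_idx, valid_idx = random_split(range(10))
print(len(train_idx), len(valid_idx))
```

Because the seed is fixed, calling the splitter again on the same items gives the same partition, which keeps validation metrics comparable between runs.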

dls = artsie.dataloaders(path); dls

<fastai.data.core.DataLoaders at 0x7f290adc1a60>

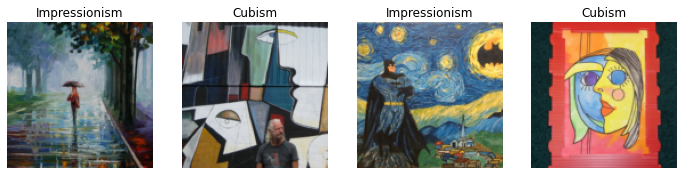

dls.valid.show_batch(max_n=4, nrows=1)

Training and Fine-tuning with Wandb Tracking

# c = Config()

model_fname = c.model_path/'artsie'

?SaveModelCallback

Init signature:

SaveModelCallback(

monitor='valid_loss',

comp=None,

min_delta=0.0,

fname='model',

every_epoch=False,

at_end=False,

with_opt=False,

reset_on_fit=True,

)

Docstring: A `TrackerCallback` that saves the model's best during training and loads it at the end.

File: ~/anaconda3/envs/aiking/lib/python3.8/site-packages/fastai/callback/tracker.py

Type: type

Subclasses:

learn = cnn_learner(dls, resnet18, metrics=accuracy, cbs=[WandbCallback(), SaveModelCallback(fname=model_fname)]); learn

<fastai.learner.Learner at 0x7f27245557f0>
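The `learn.fine_tune(8)` call that follows trains in two phases, which is why its log has two tables: one epoch with the pretrained body frozen, then eight epochs with all layers unfrozen and discriminative learning rates. The sketch below works out each parameter group's peak learning rate, assuming fastai's defaults (`base_lr=2e-3`, `lr_mult=100`, with `base_lr` halved before unfreezing) and the three parameter groups that appear as `lr_0` to `lr_2` in the W&B logs. `even_mults` mirrors the fastai helper of the same name; `group_lrs` is a hypothetical helper. These are the peaks of each one-cycle schedule, not the per-batch values that get logged.

```python
from typing import List


def even_mults(start: float, stop: float, n: int) -> List[float]:
    """Log-spaced values from start to stop in n steps."""
    if n == 1:
        return [stop]
    step = (stop / start) ** (1 / (n - 1))
    return [start * step**i for i in range(n)]


def group_lrs(lr: slice, n_groups: int) -> List[float]:
    """Expand a slice of learning rates into one value per parameter group."""
    if lr.start:
        return even_mults(lr.start, lr.stop, n_groups)
    # slice(stop): the head gets `stop`, every other group gets stop / 10
    return [lr.stop / 10] * (n_groups - 1) + [lr.stop]


base_lr, lr_mult, n_groups = 2e-3, 100, 3  # assumed defaults, 3 param groups
frozen = group_lrs(slice(base_lr), n_groups)  # phase 1: body frozen
base_lr /= 2  # halved before unfreezing
unfrozen = group_lrs(slice(base_lr / lr_mult, base_lr), n_groups)  # phase 2
print(frozen, unfrozen)
```

The earliest layers end up with a peak learning rate 100 times smaller than the head, so the pretrained features change slowly while the new classifier adapts quickly.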

learn.fine_tune(8)

| epoch | train_loss | valid_loss | accuracy | time |
|---|---|---|---|---|
| 0 | 0.992697 | 1.714089 | 0.629630 | 00:08 |

Better model found at epoch 0 with valid_loss value: 1.7140886783599854.

| epoch | train_loss | valid_loss | accuracy | time |
|---|---|---|---|---|
| 0 | 0.414968 | 0.824264 | 0.759259 | 00:08 |
| 1 | 0.353526 | 0.540146 | 0.814815 | 00:08 |
| 2 | 0.269805 | 0.260798 | 0.907407 | 00:09 |
| 3 | 0.204155 | 0.218908 | 0.907407 | 00:07 |
| 4 | 0.164788 | 0.163133 | 0.962963 | 00:08 |
| 5 | 0.133795 | 0.152627 | 0.962963 | 00:08 |
| 6 | 0.114421 | 0.143282 | 0.962963 | 00:08 |
| 7 | 0.100710 | 0.136252 | 0.962963 | 00:08 |

Better model found at epoch 0 with valid_loss value: 0.8242642283439636.

Better model found at epoch 1 with valid_loss value: 0.5401461124420166.

Better model found at epoch 2 with valid_loss value: 0.26079821586608887.

Better model found at epoch 3 with valid_loss value: 0.21890847384929657.

Better model found at epoch 4 with valid_loss value: 0.16313259303569794.

Better model found at epoch 5 with valid_loss value: 0.152627095580101.

Better model found at epoch 6 with valid_loss value: 0.14328210055828094.

Better model found at epoch 7 with valid_loss value: 0.13625217974185944.
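Each "Better model found" line above is printed by `SaveModelCallback`, which monitors `valid_loss` by default and saves the weights to `model_fname` whenever the value improves by more than `min_delta`. With `reset_on_fit=True`, the best value starts over at the beginning of each fit, and `fine_tune` runs two fits, which is why epoch numbering restarts at 0. The sketch below is a simplified version of that comparison for a lower-is-better metric. `improvement_epochs` is a hypothetical helper, not fastai code, and the losses are copied from the unfrozen phase of the log.

```python
from typing import List, Sequence


def improvement_epochs(values: Sequence[float], min_delta: float = 0.0,
                       best: float = float("inf")) -> List[int]:
    """Epochs at which a lower-is-better metric improves by more than min_delta.

    `best` starts at +inf, as it would right after a reset at the start of a fit.
    """
    epochs = []
    for epoch, value in enumerate(values):
        if value < best - min_delta:
            best = value
            epochs.append(epoch)  # SaveModelCallback would save the model here
    return epochs


# valid_loss values from the unfrozen phase of the training log above
unfrozen_losses = [0.824264, 0.540146, 0.260798, 0.218908,
                   0.163133, 0.152627, 0.143282, 0.136252]
saved = improvement_epochs(unfrozen_losses)
print(saved)
```

Since the validation loss decreased at every epoch, a save happens at all eight epochs, matching the log. A positive `min_delta` would skip saves for small improvements.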

Interpretation

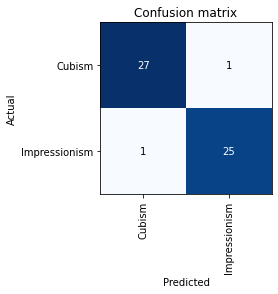

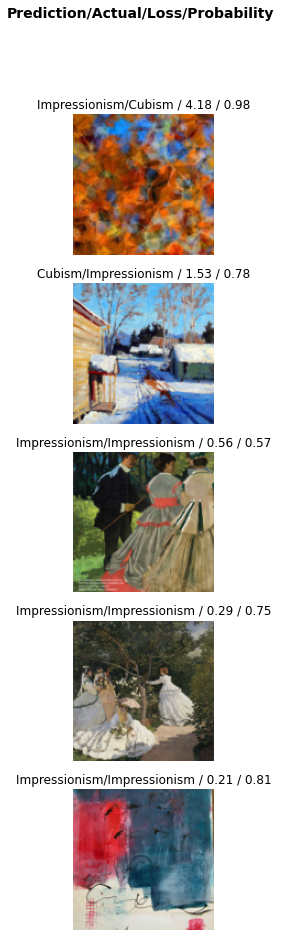

interp = ClassificationInterpretation.from_learner(learn); interp

interp.plot_confusion_matrix()

interp.plot_top_losses(5, nrows=5)
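`plot_confusion_matrix` counts how many validation images fall into each (actual, predicted) pair of classes, and the diagonal holds the correct predictions. A minimal stdlib sketch of that tally follows. `confusion_counts` is a hypothetical helper, the class order is an assumption, and the labels are made up for illustration; they are not the notebook's predictions.

```python
from collections import Counter
from typing import List, Sequence


def confusion_counts(actual: Sequence[str], predicted: Sequence[str],
                     classes: List[str]) -> List[List[int]]:
    """Rows are actual classes, columns are predicted classes."""
    pairs = Counter(zip(actual, predicted))
    return [[pairs[(a, p)] for p in classes] for a in classes]


classes = ["Cubism", "Impressionism"]
actual = ["Cubism", "Cubism", "Impressionism", "Impressionism", "Impressionism"]
predicted = ["Cubism", "Impressionism", "Impressionism", "Impressionism", "Cubism"]
matrix = confusion_counts(actual, predicted, classes)
# Accuracy is the diagonal sum divided by the number of items.
accuracy = sum(matrix[i][i] for i in range(len(classes))) / len(actual)
print(matrix, accuracy)
```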

wandb.log_artifact??

Signature:

wandb.log_artifact(

artifact_or_path: Union[wandb.sdk.wandb_artifacts.Artifact, str],

name: Union[str, NoneType] = None,

type: Union[str, NoneType] = None,

aliases: Union[List[str], NoneType] = None,

) -> wandb.sdk.wandb_artifacts.Artifact

Source:

def log_artifact(
    self,
    artifact_or_path: Union[wandb_artifacts.Artifact, str],
    name: Optional[str] = None,
    type: Optional[str] = None,
    aliases: Optional[List[str]] = None,
) -> wandb_artifacts.Artifact:
    """ Declare an artifact as output of a run.

    Arguments:
        artifact_or_path: (str or Artifact) A path to the contents of this artifact,
            can be in the following forms:
                - `/local/directory`
                - `/local/directory/file.txt`
                - `s3://bucket/path`
            You can also pass an Artifact object created by calling
            `wandb.Artifact`.
        name: (str, optional) An artifact name. May be prefixed with entity/project.
            Valid names can be in the following forms:
                - name:version
                - name:alias
                - digest
            This will default to the basename of the path prepended with the current
            run id if not specified.
        type: (str) The type of artifact to log, examples include `dataset`, `model`
        aliases: (list, optional) Aliases to apply to this artifact,
            defaults to `["latest"]`

    Returns:
        An `Artifact` object.
    """
    return self._log_artifact(artifact_or_path, name, type, aliases)

File: ~/anaconda3/envs/aiking/lib/python3.8/site-packages/wandb/sdk/wandb_run.py

Type: method

Model Export

c.d

{'archive_path': '/Landmark2/pdo/aiking/archive',

'data_path': '/Landmark2/pdo/aiking/data',

'learner_path': '/Landmark2/pdo/aiking/learners',

'model_path': '/Landmark2/pdo/aiking/models',

'storage_path': '/tmp',

'version': 2}
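`c.d` stores each location as a plain string, but the notebook reads them as `c.model_path` and `c.learner_path` and joins them with `/`, so those attributes must come back as `pathlib.Path` objects. The sketch below imitates that convenience with a hypothetical `MiniConfig` class, in which attributes ending in `_path` are returned as `Path` and everything else is returned as stored. It is not fastai's `Config` implementation, only the behaviour this notebook relies on.

```python
from pathlib import Path
from typing import Any, Dict


class MiniConfig:
    """Hypothetical stand-in for the attribute-style access used in the notebook."""

    def __init__(self, d: Dict[str, Any]):
        self.d = d

    def __getattr__(self, key: str) -> Any:
        # Only called when normal lookup fails (so not for `d` itself).
        d = self.__dict__.get("d", {})
        if key.endswith("_path") and key in d:
            return Path(d[key])
        try:
            return d[key]
        except KeyError:
            raise AttributeError(key) from None


c_demo = MiniConfig({
    "learner_path": "/Landmark2/pdo/aiking/learners",
    "model_path": "/Landmark2/pdo/aiking/models",
    "version": 2,
})
export_file = c_demo.learner_path / "artsie.pkl"
print(export_file)
```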

c.learner_path.mkdir(exist_ok=True)

learn.export(c.learner_path/'artsie.pkl')

str((c.learner_path/'artsie.pkl').resolve())

'/Landmark2/pdo/aiking/learners/artsie.pkl'

wandb.log_artifact(str((c.learner_path/'artsie.pkl').resolve()), type='learner')

<wandb.sdk.wandb_artifacts.Artifact at 0x7f27fdad00a0>
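Here `wandb.log_artifact` receives a plain path string and no `name`, so according to the docstring above the artifact name defaults to the file's basename prefixed with the current run ID. The sketch below spells out that rule together with the path forms the docstring lists (local directory, local file, `s3://`-style URI). Both helpers are hypothetical. The `run-` prefix and `-` separator are assumptions about how wandb joins the two parts, so check the artifact name in the W&B UI. Passing `name=` explicitly avoids depending on the default.

```python
import os
from typing import Optional


def artifact_source_kind(path: str) -> str:
    """Classify a log_artifact path as 'reference', 'dir' or 'file'."""
    if "://" in path:  # e.g. s3://bucket/path is logged as a reference
        return "reference"
    if os.path.isdir(path):
        return "dir"
    if os.path.isfile(path):
        return "file"
    raise FileNotFoundError(path)


def default_artifact_name(run_id: str, path: str, name: Optional[str] = None) -> str:
    """An explicit name wins; otherwise run ID + basename (format assumed)."""
    if name is not None:
        return name
    return f"run-{run_id}-{os.path.basename(path)}"


learner_file = "/Landmark2/pdo/aiking/learners/artsie.pkl"
print(default_artifact_name("6u8vvfk8", learner_file))
```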

!ls -la {path/".."/".."/"learners"}

total 45994

drwxrwxr-x 2 ubuntu ubuntu 3 Jun 2 12:21 .

drwxrwxr-x 7 ubuntu ubuntu 8 Jun 2 12:21 ..

-rw-rw-r-- 1 ubuntu ubuntu 46969490 Jun 2 12:48 artsie.pkl