Digit Cleaner Idea


%load_ext autoreload

%autoreload 2

Imports

import pandas as pd
import numpy as np
import cv2
import matplotlib.pyplot as plt
import itertools
from IPython.display import display, Image
from aiking.data.external import * # We need to import this after fastai modules
from ipywidgets.widgets import interact
import warnings
import os
import dask.bag as db
from fastprogress.fastprogress import master_bar, progress_bar
from matplotlib import cm
import PIL.Image  # plain `import PIL` does not load the PIL.Image submodule used further down
from dask.diagnostics import ProgressBar

path = untar_data("kaggle_competitions::ultra-mnist"); path

(path/"train").ls()[0]

Path('/Landmark2/pdo/aiking/data/ultra-mnist/train/ypuccwrtnt.jpeg')

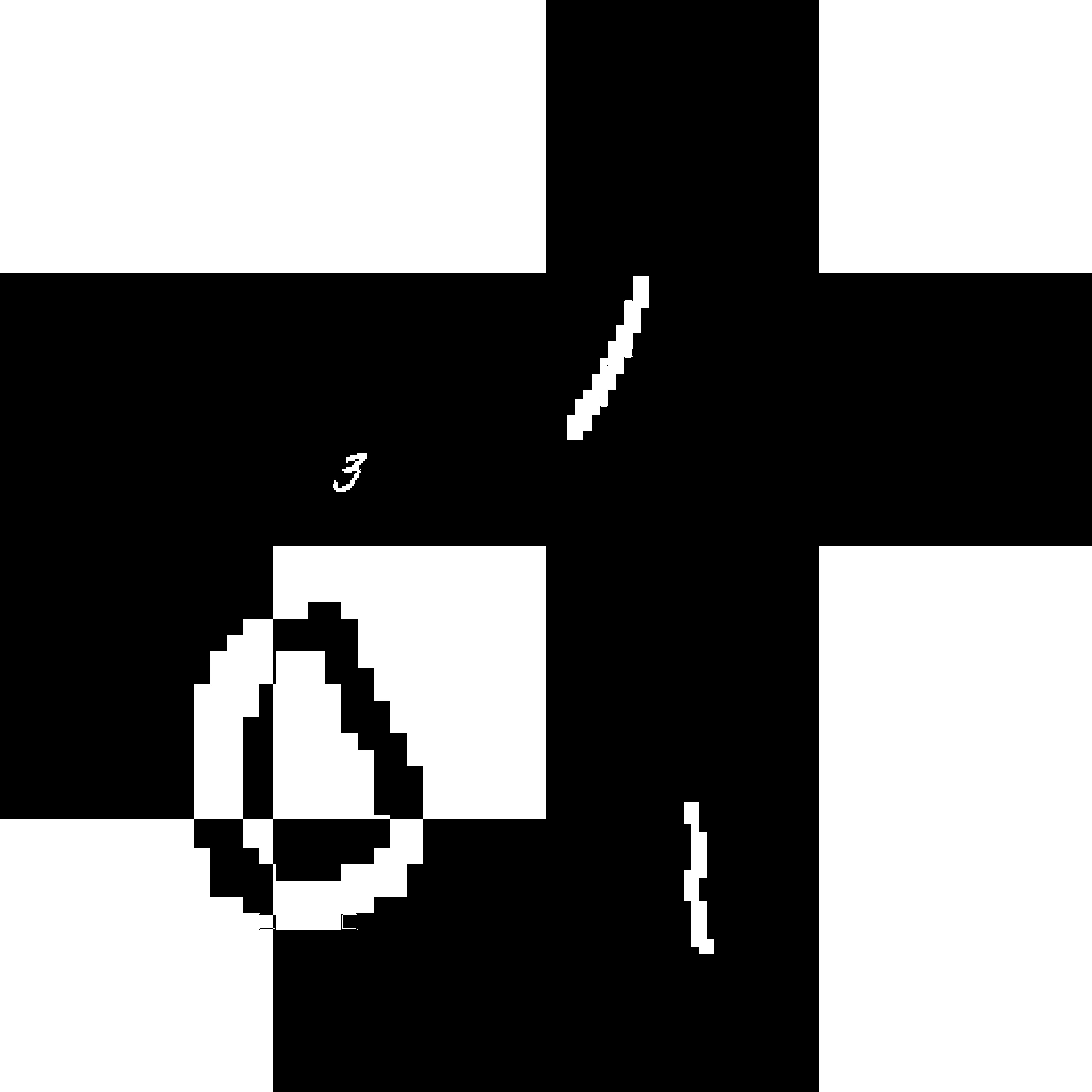

Image((path/"train").ls()[0])

images = (path/"train").ls()

df_train = pd.read_csv(path/"train.csv")

@interact(idx=(0, len(images) - 1))  # the slider's upper bound is inclusive, so stop at the last valid index
def display_img(idx):
    img_loc = images[idx]
    img_name = os.path.splitext(img_loc.name)[0]
    # Print the label (digit_sum) of the selected image
    print(df_train[df_train['id'] == img_name]['digit_sum'].values)
    img1 = cv2.imread(str(img_loc.resolve()), cv2.IMREAD_GRAYSCALE)
    img2 = img1/255
    # img1 is in 0-255 and img2 in 0-1; with one shared colour scale the right half renders almost black
    Hori = np.concatenate((img1, img2), axis=1)
    plt.imshow(Hori, cmap='gray')
    # return cv2.imread(img_loc)
    # return display(Image(img_loc, width=200, height=200), Image(img_loc, width=200, height=200))

cv2.imread(str(images[0].resolve()), cv2.IMREAD_GRAYSCALE)

array([[255, 255, 255, ..., 255, 255, 255],
       [255, 255, 255, ..., 255, 255, 255],
       [255, 255, 255, ..., 255, 255, 255],
       ...,
       [255, 255, 255, ..., 255, 255, 255],
       [255, 255, 255, ..., 255, 255, 255],
       [255, 255, 255, ..., 255, 255, 255]], dtype=uint8)

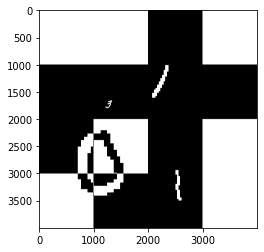

img1 = cv2.imread(str(images[0].resolve()), cv2.IMREAD_GRAYSCALE)

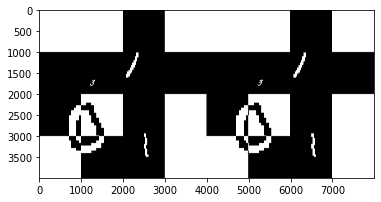

Hori = np.concatenate((img1, img1), axis=1)

plt.imshow(Hori, cmap='gray')


img = cv2.imread(str(images[0].resolve()), cv2.IMREAD_GRAYSCALE)

img = img/255

plt.imshow(img, cmap='gray')


# Mean intensity of each 1000x1000 tile of the 4000x4000 image (rows i, columns j)
for i in range(4):
    for j in range(4):
        print(1000*i, 1000*(i+1), 1000*j, 1000*(j+1))
        print(np.mean(img[1000*i:1000*(i+1),1000*j:1000*(j+1)]))# >0.5
        # print(i,j)

0 1000 0 1000
1.0
0 1000 1000 2000
1.0
0 1000 2000 3000
0.0
0 1000 3000 4000
1.0
1000 2000 0 1000
0.0
1000 2000 1000 2000
0.005666682352941176
1000 2000 2000 3000
0.0484751725490196
1000 2000 3000 4000
0.0
2000 3000 0 1000
0.1405221647058823
2000 3000 1000 2000
0.8555160588235289
2000 3000 2000 3000
0.003584
2000 3000 3000 4000
1.0
3000 4000 0 1000
0.9356675725490194
3000 4000 1000 2000
0.10501367450980394
3000 4000 2000 3000
0.02856782352941177
3000 4000 3000 4000
1.0
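The nested loop above slices the 4000×4000 image into a 4×4 grid of 1000-pixel tiles and prints the mean intensity of each tile. A minimal sketch of the same computation in a single vectorized step, assuming the image height and width are exact multiples of the tile size (true for 4000×4000 images with 1000-pixel tiles); `tile_means` is a hypothetical helper, not part of the notebook:

```python
import numpy as np

def tile_means(img: np.ndarray, tile: int) -> np.ndarray:
    """Return a (rows, cols) grid holding the mean of each tile x tile block.

    Assumes img is 2-D and both of its dimensions are multiples of `tile`.
    """
    h, w = img.shape
    if h % tile or w % tile:
        raise ValueError("image dimensions must be multiples of the tile size")
    # Split each axis into (number of tiles, pixels per tile), then average
    # over the two within-tile axes.
    blocks = img.reshape(h // tile, tile, w // tile, tile)
    return blocks.mean(axis=(1, 3))

# Small synthetic check: a 4x4 image made of 2x2 tiles.
demo = np.array([[1, 1, 0, 0],
                 [1, 1, 0, 0],
                 [0, 0, 1, 0],
                 [0, 0, 0, 1]], dtype=float)
print(tile_means(demo, 2).tolist())   # [[1.0, 0.0], [0.0, 0.5]]
```

On a real training image, `tile_means(img, 1000)` should give the same 16 values as the loop, arranged as a 4×4 array.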

list(itertools.product(range(4), range(4)))

[(0, 0),
 (0, 1),
 (0, 2),
 (0, 3),
 (1, 0),
 (1, 1),
 (1, 2),
 (1, 3),
 (2, 0),
 (2, 1),
 (2, 2),
 (2, 3),
 (3, 0),
 (3, 1),
 (3, 2),
 (3, 3)]

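def image_cleaner(img, threshold=0.5):
    # Scale the uint8 image to [0, 1]; this creates a new float array, so the caller's image is not modified
    img = img/255
    # Visit the 4x4 grid of 1000x1000-pixel tiles of the 4000x4000 image
    for i,j in itertools.product(range(4), range(4)):
        x1 = 1000*i
        x2 = 1000*(i+1)
        y1 = 1000*j
        y2 = 1000*(j+1)
        # A mostly white tile (mean above threshold) is inverted so that it gets a dark background
        if np.mean(img[x1:x2, y1:y2]) > threshold:
            img[x1:x2, y1:y2] = np.abs(img[x1:x2, y1:y2] -1)
    return img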
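`image_cleaner` inverts every tile whose mean exceeds the threshold, so that mostly white tiles end up with a dark background. A vectorized sketch of the same rule for an arbitrary tile size, assuming a 2-D uint8 image whose sides are multiples of the tile size; `invert_bright_tiles` is a hypothetical name. It uses `1 - x`, which equals the notebook's `np.abs(x - 1)` for values in [0, 1]:

```python
import numpy as np

def invert_bright_tiles(img: np.ndarray, tile: int = 1000, threshold: float = 0.5) -> np.ndarray:
    """Scale a 2-D uint8 image to [0, 1] and invert every tile whose mean exceeds `threshold`.

    Assumes both image dimensions are exact multiples of `tile`.
    """
    h, w = img.shape
    # New float array in [0, 1], viewed as (tile rows, pixels, tile cols, pixels)
    blocks = (img.astype(np.float64) / 255.0).reshape(h // tile, tile, w // tile, tile)
    bright = blocks.mean(axis=(1, 3)) > threshold          # one boolean per tile
    # Broadcast the per-tile decision over every pixel of that tile
    cleaned = np.where(bright[:, None, :, None], 1.0 - blocks, blocks)
    return cleaned.reshape(h, w)

# Synthetic 4x4 image with 2x2 tiles: the two left tiles are white, and the
# bottom-right tile has a single white pixel on black.
demo = np.zeros((4, 4), dtype=np.uint8)
demo[:, :2] = 255
demo[3, 3] = 255
print(invert_bright_tiles(demo, tile=2).sum())   # 1.0: only the lone white pixel remains
```

With the defaults, `invert_bright_tiles(img)` should reproduce `image_cleaner(img)` on the 4000×4000 images, up to floating-point rounding in the tile means.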

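def image_cleaner2(img, threshold=0.5):
    # Scale to [0, 1] (new float array, the input is not modified)
    img = img/255
    for i,j in itertools.product(range(4), range(4)):
        x1 = 1000*i
        x2 = 1000*(i+1)
        y1 = 1000*j
        y2 = 1000*(j+1)
        max_xy = 4000
        # Sum of the tile's left column, right column, top row and bottom row (4 x 1000 pixels).
        # The min(..., max_xy-1) guards never change anything here because x1, y1 <= 3000.
        border = img[x1:x2,min(y1,max_xy -1)].sum() + img[x1:x2, y2-1].sum() + img[min(x1, max_xy-1), y1:y2].sum() + img[x2-1, y1:y2].sum()
        # Invert when the mean border intensity reaches the threshold (2000 of 4000 for the default 0.5)
        if border >= threshold*4000:
            img[x1:x2, y1:y2] = np.abs(img[x1:x2, y1:y2] -1)
    return img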
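`image_cleaner2` decides per tile from its edges instead of its whole area: it sums the first and last column and the first and last row of each 1000-pixel tile (4000 samples, corners counted twice) and inverts when that sum is at least 2000 with the default settings, i.e. when the mean border intensity is at least 0.5. A plausible motivation, not stated in the notebook, is that a large digit can push the whole-tile mean past the threshold while the border still shows the true background. The sketch below uses hypothetical helpers and a small synthetic tile to show a case where the two rules disagree:

```python
import numpy as np

def border_mean(tile: np.ndarray) -> float:
    """Mean of the first/last column and first/last row (corners counted twice),
    mirroring the four edge sums in image_cleaner2."""
    edges = np.concatenate([tile[:, 0], tile[:, -1], tile[0, :], tile[-1, :]])
    return float(edges.mean())

def should_invert_by_mean(tile: np.ndarray, threshold: float = 0.5) -> bool:
    return float(tile.mean()) > threshold      # image_cleaner's rule

def should_invert_by_border(tile: np.ndarray, threshold: float = 0.5) -> bool:
    return border_mean(tile) >= threshold       # image_cleaner2's rule

# Synthetic 10x10 tile: dark background (0.0) with a large bright blob that
# covers more than half of the area but never touches the edges.
tile = np.zeros((10, 10))
tile[1:9, 1:9] = 1.0    # 64 of 100 pixels are bright

print(should_invert_by_mean(tile))    # True: would flip this dark-background tile to white
print(should_invert_by_border(tile))  # False: the background is judged from the edges
```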

# img = cv2.imread(str(images[0].resolve()), cv2.IMREAD_GRAYSCALE)
# # plt.imshow(image_cleaner(img), cmap='gray')
# i, j = 2,2
# img = img/255
# x1 = 1000*i
# x2 = 1000*(i+1)
# y1 = 1000*j
# y2 = 1000*(j+1)
# max_xy = 4000
# border = img[x1:x2,min(y1,max_xy -1)].sum() + img[x1:x2, y2-1].sum() + img[min(x1, max_xy-1), y1:y2].sum() + img[x2-1, y1:y2].sum()
# if border >=2000: img[x1:x2, y1:y2] = np.abs(img[x1:x2, y1:y2] -1)
# print(border)

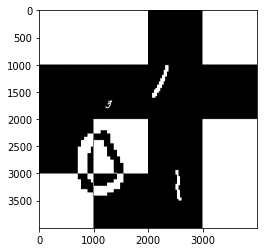

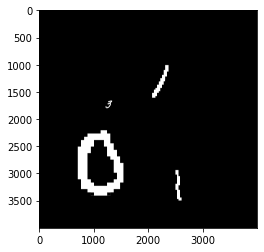

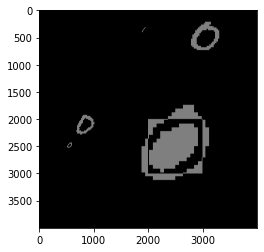

img = cv2.imread(str(images[0].resolve()), cv2.IMREAD_GRAYSCALE)

plt.imshow(image_cleaner2(img), cmap='gray')


plt.imshow(img, cmap='gray')


images = (path/"train").ls()

df_train = pd.read_csv(path/"train.csv")

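from ipywidgets import IntSlider

# continuous_update must be set on the slider itself; as a keyword of interact() it is silently ignored
@interact(idx=IntSlider(min=0, max=len(images) - 1, continuous_update=False))
def display_img(idx):
    img_loc = images[idx]
    img_name = os.path.splitext(img_loc.name)[0]
    print(df_train[df_train['id'] == img_name]['digit_sum'].values)
    img1 = cv2.imread(str(img_loc.resolve()), cv2.IMREAD_GRAYSCALE)
    # Side by side: original scaled to [0, 1], mean-based cleaner, border-based cleaner
    Hori = np.concatenate((img1/255, image_cleaner(img1), image_cleaner2(img1)), axis=1)
    plt.figure(figsize = (30,10))
    plt.imshow(Hori, cmap='gray')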

path.ls()

(#9) [Path('/Landmark2/pdo/aiking/data/ultra-mnist/test_black'),Path('/Landmark2/pdo/aiking/data/ultra-mnist/train.csv'),Path('/Landmark2/pdo/aiking/data/ultra-mnist/train'),Path('/Landmark2/pdo/aiking/data/ultra-mnist/train_train.csv'),Path('/Landmark2/pdo/aiking/data/ultra-mnist/test'),Path('/Landmark2/pdo/aiking/data/ultra-mnist/valid_train.csv'),Path('/Landmark2/pdo/aiking/data/ultra-mnist/ultra-mnist.zip'),Path('/Landmark2/pdo/aiking/data/ultra-mnist/sample_submission.csv'),Path('/Landmark2/pdo/aiking/data/ultra-mnist/train_black')]

pb_train = (path/"train_black")

pb_test = (path/"test_black")

pb_train.mkdir(exist_ok=True)

pb_test.mkdir(exist_ok=True)

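for img in (path/"train").ls()[:1]:
    data = image_cleaner2(cv2.imread(str(img.resolve()), cv2.IMREAD_GRAYSCALE))
    # image_cleaner2 returns floats in [0, 1]; rescale to 0-255 uint8 before writing the JPEG
    data = cv2.normalize(data, None, alpha = 0, beta = 255, norm_type = cv2.NORM_MINMAX, dtype = cv2.CV_32F).astype(np.uint8)
    cv2.imwrite(str(pb_train/img.name), data)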
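`image_cleaner2` returns a float64 array in [0, 1]. As far as I can tell, `cv2.imwrite` converts non-8-bit data to 8-bit for JPEG without rescaling it, so such an array would be saved with pixel values of only 0 or 1 and look almost entirely black; that is consistent with the later min-max normalization pass over `train_black2`. A minimal sketch of an explicit conversion, assuming the input is already in [0, 1] (`to_uint8` is a hypothetical helper):

```python
import numpy as np

def to_uint8(img01: np.ndarray) -> np.ndarray:
    """Map a float image in [0, 1] to uint8 in [0, 255], rounding and clipping."""
    return np.clip(np.rint(img01 * 255.0), 0, 255).astype(np.uint8)

cleaned = np.array([[0.0, 0.5], [0.999, 1.0]])
print(to_uint8(cleaned).tolist())                  # [[0, 128], [255, 255]]
# Roughly what an unscaled 8-bit cast keeps: the image collapses to 0s and 1s
print(np.rint(cleaned).astype(np.uint8).tolist())  # [[0, 0], [1, 1]]
```

The `cv2.normalize(..., NORM_MINMAX)` call used in `create_clean_ds` serves the same purpose, with the difference that it stretches the actual minimum and maximum of each image to 0 and 255.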

def create_clean_ds(folder="train"):
    pb_folder = path/f"{folder}_black"
    for img in progress_bar((path/folder).ls()):
        data = image_cleaner2(cv2.imread(str(img.resolve()), cv2.IMREAD_GRAYSCALE))
        data = cv2.normalize(data, None, alpha = 0, beta = 255, norm_type = cv2.NORM_MINMAX, dtype = cv2.CV_32F).astype(np.uint8)
        cv2.imwrite(str(pb_folder/img.name), data)

create_clean_ds()

0.06% [17/28000 00:04<2:11:05]

KeyboardInterrupt (the run was stopped manually)
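`create_clean_ds` rescales each cleaned image with `cv2.normalize(..., alpha=0, beta=255, norm_type=cv2.NORM_MINMAX, dtype=cv2.CV_32F)` and then casts with `.astype(np.uint8)`. For reference, a NumPy sketch of that min-max mapping (written for illustration, not OpenCV's own code): the smallest pixel becomes 0 and the largest 255. Note that `.astype(np.uint8)` truncates rather than rounds, so 127.5 becomes 127, and that, unlike a fixed `* 255`, min-max stretches images whose values do not span the full [0, 1] range. `minmax_to_uint8` is a hypothetical name:

```python
import numpy as np

def minmax_to_uint8(img: np.ndarray) -> np.ndarray:
    """Linearly map img so its minimum becomes 0 and its maximum 255,
    then truncate to uint8 (like `.astype(np.uint8)` after cv2.normalize)."""
    x = img.astype(np.float64)
    lo, hi = float(x.min()), float(x.max())
    if hi - lo <= 0:
        # Constant image: nothing to stretch; this sketch returns all zeros.
        return np.zeros(x.shape, dtype=np.uint8)
    # Dividing first keeps the maximum exactly at 1.0, hence exactly 255.
    scaled = (x - lo) / (hi - lo) * 255.0
    return scaled.astype(np.uint8)      # truncation, not rounding

print(minmax_to_uint8(np.array([0.0, 0.5, 1.0])).tolist())   # [0, 127, 255]
print(minmax_to_uint8(np.array([0.25, 0.75])).tolist())      # [0, 255]
```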

pb_train = (path/"train_black")

pb_test = (path/"test_black")

pb_train.mkdir(exist_ok=True)

pb_test.mkdir(exist_ok=True)

def create_clean_ds2(folder="train"):
    pb_folder = path/f"{folder}_black"
    def convert_image(img):
        # Skip images that were already converted, so an interrupted run can be resumed
        if not (pb_folder/img.name).exists():
            data = image_cleaner2(cv2.imread(str(img.resolve()), cv2.IMREAD_GRAYSCALE))
            data = cv2.normalize(data, None, alpha = 0, beta = 255, norm_type = cv2.NORM_MINMAX, dtype = cv2.CV_32F).astype(np.uint8)
            cv2.imwrite(str(pb_folder/img.name), data)
        return True
    # Build a dask bag over the image paths and map the conversion over it in parallel
    res = db.from_sequence((path/folder).ls()).map(convert_image)
    with ProgressBar():
        res.compute()

create_clean_ds2(folder="train")

[########################################] | 100% Completed | 1hr 30min 26.4s
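`create_clean_ds2` replaces the serial loop (which fastprogress estimated at about 2h11m) with a `dask.bag` map; dask bags run on a process-based scheduler by default. Each task also skips files whose output already exists, so an interrupted run can be resumed. As a swapped-in alternative that needs only the standard library, here is a minimal sketch using `concurrent.futures`. `convert_one` and `convert_all` are hypothetical names, and the file copy is a stand-in for the real read, clean, normalize and write steps:

```python
import shutil
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path

def convert_one(src: Path, out_dir: Path) -> bool:
    """Process one file unless its output already exists; return True if work was done."""
    dst = out_dir / src.name
    if dst.exists():
        return False                  # converted in an earlier (possibly interrupted) run
    shutil.copyfile(src, dst)         # stand-in for read -> clean -> normalize -> write
    return True

def convert_all(in_dir: Path, out_dir: Path, workers: int = 4) -> int:
    """Convert every file in in_dir in parallel; return how many were newly written."""
    out_dir.mkdir(parents=True, exist_ok=True)
    sources = sorted(p for p in in_dir.iterdir() if p.is_file())
    with ThreadPoolExecutor(max_workers=workers) as pool:
        done = list(pool.map(lambda p: convert_one(p, out_dir), sources))
    return sum(done)

with tempfile.TemporaryDirectory() as tmp:
    src_dir, dst_dir = Path(tmp, "train"), Path(tmp, "train_black")
    src_dir.mkdir()
    for name in ("a.jpeg", "b.jpeg", "c.jpeg"):
        (src_dir / name).write_bytes(b"fake image bytes")
    print(convert_all(src_dir, dst_dir))   # 3: every file is new
    print(convert_all(src_dir, dst_dir))   # 0: a rerun skips existing outputs
```

Threads keep the demo portable. For CPU-bound OpenCV work, a `ProcessPoolExecutor` with a module-level function (so it can be pickled) would be the closer analogue of dask's process scheduler.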

# !mv {path}/"test_black" {path}/"test_black2"

path.ls()

(#12) [Path('/Landmark2/pdo/aiking/data/ultra-mnist/train_sample.csv'),Path('/Landmark2/pdo/aiking/data/ultra-mnist/sample.csv'),Path('/Landmark2/pdo/aiking/data/ultra-mnist/train.csv'),Path('/Landmark2/pdo/aiking/data/ultra-mnist/train'),Path('/Landmark2/pdo/aiking/data/ultra-mnist/train_train.csv'),Path('/Landmark2/pdo/aiking/data/ultra-mnist/test'),Path('/Landmark2/pdo/aiking/data/ultra-mnist/train_black2'),Path('/Landmark2/pdo/aiking/data/ultra-mnist/valid_train.csv'),Path('/Landmark2/pdo/aiking/data/ultra-mnist/ultra-mnist.zip'),Path('/Landmark2/pdo/aiking/data/ultra-mnist/sample_submission.csv')...]

img = PIL.Image.open(str((path/"train_black2").ls()[2800])).convert("L")

imgarr = np.array(img)

img = cv2.imread(str((path/"train_black2").ls()[2800]))

norm_image = cv2.normalize(img, None, alpha = 0, beta = 255, norm_type = cv2.NORM_MINMAX, dtype = cv2.CV_32F)

norm_image = norm_image.astype(np.uint8)

plt.imshow(norm_image)


img2 = cv2.imread(str((path/"train").ls()[2800]))

img2

array([[[255, 255, 255],
        [255, 255, 255],
        [255, 255, 255],
        ...,
        [255, 255, 255],
        [255, 255, 255],
        [255, 255, 255]],

       [[255, 255, 255],
        [255, 255, 255],
        [255, 255, 255],
        ...,
        [255, 255, 255],
        [255, 255, 255],
        [255, 255, 255]],

       [[255, 255, 255],
        [255, 255, 255],
        [255, 255, 255],
        ...,
        [255, 255, 255],
        [255, 255, 255],
        [255, 255, 255]],

       ...,

       [[255, 255, 255],
        [255, 255, 255],
        [255, 255, 255],
        ...,
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0]],

       [[255, 255, 255],
        [255, 255, 255],
        [255, 255, 255],
        ...,
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0]],

       [[255, 255, 255],
        [255, 255, 255],
        [255, 255, 255],
        ...,
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0]]], dtype=uint8)

def create_norm_ds(inp_folder="train_black2", out_folder="train_black"):
    # cv2.imwrite does not create missing folders, so make sure the output folder exists
    (path/out_folder).mkdir(exist_ok=True)
    def norm_img(img_loc):
        # Skip images that were already normalized
        if not (path/out_folder/img_loc.name).exists():
            img = cv2.imread(str(path/inp_folder/img_loc.name))
            # Stretch the pixel values to the full 0-255 range
            norm_image = cv2.normalize(img, None, alpha = 0, beta = 255, norm_type = cv2.NORM_MINMAX, dtype = cv2.CV_32F)
            norm_image = norm_image.astype(np.uint8)
            cv2.imwrite(str(path/out_folder/img_loc.name), norm_image)
        return True
    res = db.from_sequence((path/inp_folder).ls()).map(norm_img)
    with ProgressBar():
        res.compute()

create_norm_ds()

[ ] | 0% Completed | 1min 37.0s

KeyboardInterrupt (the run was stopped manually)
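`create_norm_ds` uses the same skip-if-exists check, so rerunning it after an interruption only processes the files still missing from the output folder. A small sketch for counting that remaining work before starting a long run; `pending_files` is a hypothetical helper, and it compares file names only, without checking whether an existing output is complete or valid:

```python
import tempfile
from pathlib import Path

def pending_files(in_dir: Path, out_dir: Path) -> list[Path]:
    """Files in in_dir that have no file of the same name in out_dir yet."""
    done = {p.name for p in out_dir.iterdir()} if out_dir.exists() else set()
    return sorted(p for p in in_dir.iterdir() if p.is_file() and p.name not in done)

with tempfile.TemporaryDirectory() as tmp:
    inp, out = Path(tmp, "train_black2"), Path(tmp, "train_black")
    inp.mkdir()
    out.mkdir()
    for name in ("a.jpeg", "b.jpeg", "c.jpeg"):
        (inp / name).touch()
    (out / "b.jpeg").touch()            # pretend one image was already normalized
    print([p.name for p in pending_files(inp, out)])   # ['a.jpeg', 'c.jpeg']
```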