FastAI2021-Intro


%load_ext autoreload

%autoreload 2

Vision Example#

from aiking.visuals import make_flowchart

blocks = ['Imports', 'Data Download/Engineering','Create Dataloaders', 'Create Learner', 'Training & Fine Tuning']

layers = ['from fastai.vision.all import *\n\

from fastai.vision.widgets import *\n\

from aiking.data.external import *',

'untar_data \n url => path',

'ImageDataLoaders.from_name_func \n (path, get_image_files, valid_pct, label_func, item_tfms) => Dataloaders',

'cnn_learner \n (Dataloaders, architecture, metrics) => learner',

'learner.fine_tune \n (epochs) \n Prints Table']

make_flowchart(blocks, layers)

Imports#

from fastai.vision.all import *

from fastai.vision.widgets import *

from fastai.text.all import *

from fastai.tabular.all import *

from fastai.collab import *

from aiking.data.external import * #We need to import this after fastai modules

from fastdownload import FastDownload

2022-03-11 09:50:05.298431: W tensorflow/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libcudart.so.11.0'; dlerror: libcudart.so.11.0: cannot open shared object file: No such file or directory

2022-03-11 09:50:05.298470: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

Data Download and Engineering#

URLs.PETS

'https://s3.amazonaws.com/fast-ai-imageclas/oxford-iiit-pet.tgz'

os.environ['AIKING_HOME']

'/Landmark2/pdo/aiking'

d = FastDownload(base=os.environ['AIKING_HOME'], archive='archive', data='data')

d.get(URLs.PETS)

Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet')

untar_data(URLs.PETS)

Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet')

path = untar_data(URLs.PETS)/"images"; path

Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images')

# !rm -rf /content/drive/MyDrive/PPV/S_Personal_Study/aiking/archive

# !rm -rf /content/drive/MyDrive/PPV/S_Personal_Study/aiking/archive/oxford-iiit-pet.tgz

list_ds()

(#14) ['oxford-iiit-pet','DoppelGanger','mnist_sample','Bears','camvid_tiny','imdb','california-housing-prices','adult_sample','imdb_tok','ultra-mnist'...]

path.ls()

(#7393) [Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/Russian_Blue_124.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/miniature_pinscher_195.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/British_Shorthair_54.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/Persian_60.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/american_pit_bull_terrier_161.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/great_pyrenees_174.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/Ragdoll_197.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/saint_bernard_65.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/pug_99.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/Russian_Blue_40.jpg')...]

Create Dataloaders#

doc(get_image_files)

get_image_files[source]

get_image_files(path,recurse=True,folders=None)

Get image files in path recursively, only in folders, if specified.

def is_cat(x): return x[0].isupper()

imgname = path.ls()[0]

print(imgname,"|||", imgname.parts[-1])

imgname.parts[-1][0].isupper()

/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/Russian_Blue_124.jpg ||| Russian_Blue_124.jpg

True
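The `is_cat` rule works because of a naming convention in the Oxford-IIIT Pet dataset: file names of cat breeds start with an uppercase letter, while dog breeds start with a lowercase one. A minimal sketch of that rule, applied to a few file names from the listing above (`label_from_filename` is a hypothetical helper, not part of fastai):

```python
from pathlib import Path

def is_cat(x):
    # Same rule as in the notebook: an uppercase first letter marks a cat breed.
    return x[0].isupper()

def label_from_filename(p):
    # Hypothetical helper: apply the rule to the file name only, not the full path.
    return is_cat(Path(p).name)

samples = [
    "images/Russian_Blue_124.jpg",
    "images/miniature_pinscher_195.jpg",
    "images/Persian_60.jpg",
    "images/pug_99.jpg",
]
labels = {Path(s).name: label_from_filename(s) for s in samples}
for name, label in labels.items():
    print(f"{name}: is_cat={label}")
```

Note that the rule must see the bare file name: applied to a full path such as `/Landmark2/...`, the first character would be `/`.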

get_image_files(path), path.ls()

((#7390) [Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/Russian_Blue_124.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/miniature_pinscher_195.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/British_Shorthair_54.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/Persian_60.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/american_pit_bull_terrier_161.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/great_pyrenees_174.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/Ragdoll_197.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/saint_bernard_65.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/pug_99.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/Russian_Blue_40.jpg')...],

(#7393) [Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/Russian_Blue_124.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/miniature_pinscher_195.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/British_Shorthair_54.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/Persian_60.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/american_pit_bull_terrier_161.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/great_pyrenees_174.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/Ragdoll_197.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/saint_bernard_65.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/pug_99.jpg'),Path('/Landmark2/pdo/aiking/data/oxford-iiit-pet/images/Russian_Blue_40.jpg')...])
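The two counts differ (7390 versus 7393) because `get_image_files` keeps only files with an image extension, whereas `path.ls()` lists everything in the folder. The three extra entries are presumably non-image files; the notebook does not show them. A rough sketch of extension-based filtering, using only the standard library (`list_images` and the file names are illustrative assumptions, not fastai's implementation):

```python
import mimetypes
import tempfile
from pathlib import Path

# Every extension that the standard library maps to an image MIME type.
IMAGE_EXTS = {ext for ext, mime in mimetypes.types_map.items() if mime.startswith("image/")}

def list_images(folder):
    # Hypothetical stand-in for get_image_files: recurse and keep image files only.
    return sorted(
        p for p in Path(folder).rglob("*")
        if p.is_file() and p.suffix.lower() in IMAGE_EXTS
    )

with tempfile.TemporaryDirectory() as tmp:
    # Made-up folder content: two images and one non-image file.
    for name in ["cat_1.jpg", "dog_2.png", "notes.mat"]:
        (Path(tmp) / name).touch()
    all_names = sorted(p.name for p in Path(tmp).iterdir())
    image_names = [p.name for p in list_images(tmp)]

print(len(all_names), "entries,", len(image_names), "images")
```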

doc(ImageDataLoaders.from_name_func)

ImageDataLoaders.from_name_func[source]

ImageDataLoaders.from_name_func(path,fnames,label_func,valid_pct=0.2,seed=None,item_tfms=None,batch_tfms=None,bs=64,val_bs=None,shuffle=True,device=None)

Create from the name attrs of fnames in paths with label_func

dls = ImageDataLoaders.from_name_func(path,

get_image_files(path),

valid_pct=0.2,

seed=42,

label_func=is_cat,

item_tfms=Resize(224)); dls

<fastai.data.core.DataLoaders at 0x112bba2ef160>
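The `valid_pct=0.2` and `seed=42` arguments ask for a random 80/20 train/validation split that is reproducible across runs. The idea can be sketched in plain Python as follows; `random_split` is a hypothetical helper that uses the standard `random` module, so it will not reproduce the exact split fastai produces:

```python
import random

def random_split(items, valid_pct=0.2, seed=None):
    # Shuffle the indices with a seeded generator so the split is repeatable.
    rng = random.Random(seed)
    idxs = list(range(len(items)))
    rng.shuffle(idxs)
    cut = int(valid_pct * len(items))
    valid = [items[i] for i in idxs[:cut]]
    train = [items[i] for i in idxs[cut:]]
    return train, valid

files = [f"img_{i}.jpg" for i in range(10)]  # made-up file names
train_a, valid_a = random_split(files, valid_pct=0.2, seed=42)
train_b, valid_b = random_split(files, valid_pct=0.2, seed=42)
print(len(train_a), len(valid_a), valid_a == valid_b)
```

Fixing the seed matters when comparing models: without it, every run would be validated on a different subset.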

Create Learner#

doc(cnn_learner)

cnn_learner[source]

cnn_learner(dls,arch,normalize=True,n_out=None,pretrained=True,config=None,loss_func=None,opt_func=Adam,lr=0.001,splitter=None,cbs=None,metrics=None,path=None,model_dir='models',wd=None,wd_bn_bias=False,train_bn=True,moms=(0.95, 0.85, 0.95),cut=None,n_in=3,init=kaiming_normal_,custom_head=None,concat_pool=True,lin_ftrs=None,ps=0.5,first_bn=True,bn_final=False,lin_first=False,y_range=None)

Build a convnet style learner from dls and arch

learn = cnn_learner(dls, resnet34, metrics=error_rate); learn

Downloading: "https://download.pytorch.org/models/resnet34-b627a593.pth" to /home/rahul.saraf/.cache/torch/hub/checkpoints/resnet34-b627a593.pth

<fastai.learner.Learner at 0x112bb9e22760>

Training and Fine Tuning#

doc(learn.fine_tune)

Learner.fine_tune[source]

Learner.fine_tune(epochs,base_lr=0.002,freeze_epochs=1,lr_mult=100,pct_start=0.3,div=5.0,lr_max=None,div_final=100000.0,wd=None,moms=None,cbs=None,reset_opt=False)

Fine tune with Learner.freeze for freeze_epochs, then with Learner.unfreeze for epochs, using discriminative LR.

learn.fine_tune(1)

| epoch | train_loss | valid_loss | error_rate | time |

|---|---|---|---|---|

| 0 | 0.176319 | 0.031685 | 0.011502 | 00:54 |

| epoch | train_loss | valid_loss | error_rate | time |

|---|---|---|---|---|

| 0 | 0.064987 | 0.027418 | 0.008796 | 01:09 |
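`fine_tune(1)` prints two tables because it trains in two phases: first `freeze_epochs` epochs with the pretrained body frozen, then `epochs` epochs with all layers unfrozen and discriminative learning rates. The sketch below only mirrors the learning-rate arithmetic as I understand it from the fastai source (halve `base_lr` after the frozen phase, then spread it over a range using `lr_mult`); `fine_tune_plan` is a hypothetical helper and does no training:

```python
def fine_tune_plan(epochs, base_lr=2e-3, freeze_epochs=1, lr_mult=100):
    # Phase 1: only the newly added head is trained.
    frozen = {"phase": "frozen", "epochs": freeze_epochs, "lr_max": base_lr}
    # Phase 2: all layers train; earlier layers get the smaller learning rate.
    base_lr = base_lr / 2
    unfrozen = {
        "phase": "unfrozen",
        "epochs": epochs,
        "lr_range": (base_lr / lr_mult, base_lr),
    }
    return [frozen, unfrozen]

plan = fine_tune_plan(1)
for phase in plan:
    print(phase)
```

With the defaults shown in the signature above, the first table corresponds to the single frozen epoch and the second to the single unfrozen epoch.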

uploader = widgets.FileUpload(); uploader

# !cat ~/anaconda3/envs/aiking/lib/python3.9/site-packages/fastai/vision/all.py

img = PILImage.create(uploader.data[0])

img

---------------------------------------------------------------------------

IndexError Traceback (most recent call last)

/tmp/ipykernel_14311/450188109.py in <module>

----> 1 img = PILImage.create(uploader.data[0])

2 img

IndexError: list index out of range
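The `IndexError` above means `uploader.data` was still empty when the cell ran, i.e. no file had been uploaded through the widget yet. One way to keep the notebook running top to bottom is to fall back to a known image. A minimal sketch, assuming `uploader.data` behaves like a list with one entry per uploaded file (`first_upload_or` is a hypothetical helper):

```python
def first_upload_or(uploaded, fallback):
    # `uploaded` stands in for `uploader.data`; it is empty until a file is uploaded.
    if len(uploaded) == 0:
        return fallback
    return uploaded[0]

# No upload yet: fall back to an image that appears in the dataset listing.
source = first_upload_or([], "Russian_Blue_124.jpg")
print(source)
```

In the notebook this could be used as `img = PILImage.create(first_upload_or(uploader.data, path/'Russian_Blue_124.jpg'))`, where the choice of fallback file is an assumption.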

doc(learn.predict)

Learner.predict[source]

Learner.predict(item,rm_type_tfms=None,with_input=False)

Prediction on item, fully decoded, loss function decoded and probabilities

prediction,_, probs = learn.predict(img)

print(f"Is this a cat?: {prediction}.")

print(f"Probability it's a cat: {probs}")

Is this a cat?: True.

Probability it's a cat: tensor([2.8618e-18, 1.0000e+00])

prediction,_, probs = learn.predict(img)

print(f"Is this a cat?: {prediction}.")

print(f"Probability it's a cat: {probs[1].item():.6f}")

Is this a cat?: True.

Probability it's a cat: 1.000000
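The second version indexes `probs[1]` because `learn.predict` returns one probability per class, ordered like the class vocabulary. A small sketch of that pairing, assuming the vocabulary for the boolean `is_cat` labels is `[False, True]` (in the notebook, `dls.vocab` would confirm the order):

```python
vocab = [False, True]        # assumed class order; check dls.vocab in the notebook
probs = [2.8618e-18, 1.0]    # values printed by the notebook above

# Pair each class with its probability instead of relying on a bare index.
by_class = dict(zip(vocab, probs))
p_cat = by_class[True]
formatted = f"{p_cat:.6f}"
print(f"Probability it's a cat: {formatted}")
```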

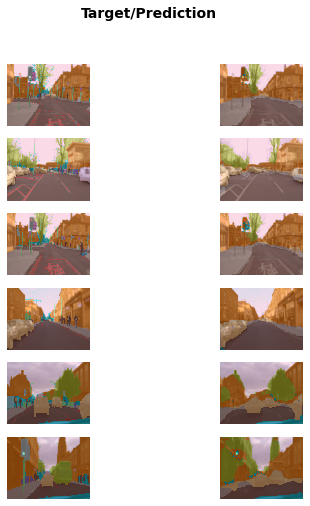

Segmentation Example#

Creating a model that can recognize the content of every individual pixel in an image is called segmentation.

path = untar_data(URLs.CAMVID_TINY); path

dls = SegmentationDataLoaders.from_label_func(

path, bs=8, fnames = get_image_files(path/"images"),

label_func = lambda o: path/'labels'/f'{o.stem}_P{o.suffix}',

codes = np.loadtxt(path/'codes.txt', dtype=str)

); dls

learn = unet_learner(dls, resnet34); learn

learn.fine_tune(8)

| epoch | train_loss | valid_loss | time |

|---|---|---|---|

| 0 | 3.968191 | 2.788000 | 00:09 |

| epoch | train_loss | valid_loss | time |

|---|---|---|---|

| 0 | 2.070896 | 1.643526 | 00:04 |

| 1 | 1.716042 | 1.232054 | 00:03 |

| 2 | 1.537476 | 1.075623 | 00:03 |

| 3 | 1.413292 | 1.040084 | 00:03 |

| 4 | 1.283577 | 0.900883 | 00:03 |

| 5 | 1.157600 | 0.799863 | 00:03 |

| 6 | 1.050192 | 0.761217 | 00:03 |

| 7 | 0.966571 | 0.760026 | 00:03 |

(path/"labels").ls()[0].stem

'Seq05VD_f02370_P'
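The stem above shows the convention that the `label_func` lambda relies on: each mask in `labels/` has the same name as its image, with `_P` appended before the extension. A standalone sketch of that mapping (the `.png` extension and the image file name are assumptions inferred from the label stem; `label_path` is a hypothetical named version of the lambda):

```python
from pathlib import Path

def label_path(image_path, root):
    # Mirror of the notebook's lambda: <root>/labels/<stem>_P<suffix>
    image_path = Path(image_path)
    return Path(root) / "labels" / f"{image_path.stem}_P{image_path.suffix}"

root = Path("camvid_tiny")
mask = label_path(root / "images" / "Seq05VD_f02370.png", root)
print(mask.name)
```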

np.loadtxt(path/'codes.txt', dtype=str)

array(['Animal', 'Archway', 'Bicyclist', 'Bridge', 'Building', 'Car',

'CartLuggagePram', 'Child', 'Column_Pole', 'Fence', 'LaneMkgsDriv',

'LaneMkgsNonDriv', 'Misc_Text', 'MotorcycleScooter', 'OtherMoving',

'ParkingBlock', 'Pedestrian', 'Road', 'RoadShoulder', 'Sidewalk',

'SignSymbol', 'Sky', 'SUVPickupTruck', 'TrafficCone',

'TrafficLight', 'Train', 'Tree', 'Truck_Bus', 'Tunnel',

'VegetationMisc', 'Void', 'Wall'], dtype='<U17')
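Each pixel in a mask stores an integer, and `codes` translates that integer into a class name by position. A small sketch of the lookup, using the codes printed above and a made-up 2x2 mask:

```python
codes = [
    "Animal", "Archway", "Bicyclist", "Bridge", "Building", "Car",
    "CartLuggagePram", "Child", "Column_Pole", "Fence", "LaneMkgsDriv",
    "LaneMkgsNonDriv", "Misc_Text", "MotorcycleScooter", "OtherMoving",
    "ParkingBlock", "Pedestrian", "Road", "RoadShoulder", "Sidewalk",
    "SignSymbol", "Sky", "SUVPickupTruck", "TrafficCone",
    "TrafficLight", "Train", "Tree", "Truck_Bus", "Tunnel",
    "VegetationMisc", "Void", "Wall",
]

# Made-up mask: sky on the top row, road and a car on the bottom row.
mask = [[21, 21],
        [17, 5]]

named = [[codes[v] for v in row] for row in mask]
print(named)
```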

learn.show_results(max_n=6, figsize=(7,8))

path

Path('/Landmark2/pdo/aiking/data/camvid_tiny')

Text Example#

dls = TextDataLoaders.from_folder(untar_data(URLs.IMDB), valid='test'); dls

learn = text_classifier_learner(dls, AWD_LSTM, drop_mult=0.5, metrics=accuracy); learn

learn.fine_tune(4, 1e-2)

| epoch | train_loss | valid_loss | accuracy | time |

|---|---|---|---|---|

| 0 | 0.457429 | 0.427618 | 0.803440 | 06:59 |

| epoch | train_loss | valid_loss | accuracy | time |

|---|---|---|---|---|

| 0 | 0.314991 | 0.253363 | 0.898840 | 13:40 |

| 1 | 0.244463 | 0.206381 | 0.919240 | 13:41 |

| 2 | 0.184156 | 0.188984 | 0.927960 | 13:40 |

| 3 | 0.154114 | 0.198980 | 0.927840 | 13:39 |
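`TextDataLoaders.from_folder(..., valid='test')` relies on the IMDB folder layout: reviews sit in `train/` and `test/`, each with `pos/` and `neg/` subfolders, so the label comes from the parent folder and the validation set from the `test` folder. A minimal sketch of reading both from a path (the file names are made up; `describe` is a hypothetical helper):

```python
from pathlib import PurePosixPath

def describe(p, valid="test"):
    # <root>/<split>/<label>/<file>: the label is the parent folder name.
    parts = PurePosixPath(p).parts
    split, label = parts[-3], parts[-2]
    return {"label": label, "is_valid": split == valid}

examples = ["imdb/train/pos/0_9.txt", "imdb/test/neg/1_3.txt"]
described = [describe(e) for e in examples]
print(described)
```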

Tabular Example#

doc(untar_data)

untar_data[source]

untar_data(url,archive=None,data=None,c_key='data',force_download=False)

Download url to fname if dest doesn't exist, and extract to folder dest

path = untar_data(URLs.ADULT_SAMPLE); path.ls()

(#3) [Path('/content/drive/MyDrive/PPV/S_Personal_Study/aiking/data/adult_sample/export.pkl'),Path('/content/drive/MyDrive/PPV/S_Personal_Study/aiking/data/adult_sample/adult.csv'),Path('/content/drive/MyDrive/PPV/S_Personal_Study/aiking/data/adult_sample/models')]

df = pd.read_csv(path/'adult.csv'); df.head()

| | age | workclass | fnlwgt | education | education-num | marital-status | occupation | relationship | race | sex | capital-gain | capital-loss | hours-per-week | native-country | salary |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 49 | Private | 101320 | Assoc-acdm | 12.0 | Married-civ-spouse | NaN | Wife | White | Female | 0 | 1902 | 40 | United-States | >=50k |

| 1 | 44 | Private | 236746 | Masters | 14.0 | Divorced | Exec-managerial | Not-in-family | White | Male | 10520 | 0 | 45 | United-States | >=50k |

| 2 | 38 | Private | 96185 | HS-grad | NaN | Divorced | NaN | Unmarried | Black | Female | 0 | 0 | 32 | United-States | <50k |

| 3 | 38 | Self-emp-inc | 112847 | Prof-school | 15.0 | Married-civ-spouse | Prof-specialty | Husband | Asian-Pac-Islander | Male | 0 | 0 | 40 | United-States | >=50k |

| 4 | 42 | Self-emp-not-inc | 82297 | 7th-8th | NaN | Married-civ-spouse | Other-service | Wife | Black | Female | 0 | 0 | 50 | United-States | <50k |

df.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 32561 entries, 0 to 32560

Data columns (total 15 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 age 32561 non-null int64

1 workclass 32561 non-null object

2 fnlwgt 32561 non-null int64

3 education 32561 non-null object

4 education-num 32074 non-null float64

5 marital-status 32561 non-null object

6 occupation 32049 non-null object

7 relationship 32561 non-null object

8 race 32561 non-null object

9 sex 32561 non-null object

10 capital-gain 32561 non-null int64

11 capital-loss 32561 non-null int64

12 hours-per-week 32561 non-null int64

13 native-country 32561 non-null object

14 salary 32561 non-null object

dtypes: float64(1), int64(5), object(9)

memory usage: 3.7+ MB

doc(TabularDataLoaders.from_csv)

TabularDataLoaders.from_csv[source]

TabularDataLoaders.from_csv(csv,skipinitialspace=True,path='.',procs=None,cat_names=None,cont_names=None,y_names=None,y_block=None,valid_idx=None,bs=64,shuffle_train=None,shuffle=True,val_shuffle=False,n=None,device=None,drop_last=None,val_bs=None)

Create from csv file in path using procs

dls = TabularDataLoaders.from_csv(path/'adult.csv', path=path, y_names='salary',

cat_names=['workclass','education','marital-status',  # the parameter is cat_names (plural), not cat_name

'occupation','relationship','race'],

cont_names=['age','fnlwgt','education-num'],

procs = [Categorify, FillMissing, Normalize]

);dls

<fastai.tabular.data.TabularDataLoaders at 0x7f901f566550>
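The three `procs` prepare the columns before training: `Categorify` turns categories into integer codes, `FillMissing` replaces missing continuous values, and `Normalize` rescales continuous columns. The sketch below illustrates each idea in plain Python on values taken from `df.head()` above. It is a simplification, not fastai's implementation; filling with the median, reserving code 0 for missing categories, and using the population standard deviation are assumptions about the defaults.

```python
import statistics

def fill_missing(values):
    # Replace None with the median and remember which entries were missing.
    present = [v for v in values if v is not None]
    median = statistics.median(present)
    filled = [median if v is None else v for v in values]
    was_missing = [v is None for v in values]
    return filled, was_missing

def normalize(values):
    # Shift to zero mean and scale to unit standard deviation.
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]

def categorify(values, na_token="#na#"):
    # Code 0 is reserved for missing values; real categories start at 1.
    vocab = [na_token] + sorted({v for v in values if v is not None})
    index = {v: i for i, v in enumerate(vocab)}
    return [0 if v is None else index[v] for v in values], vocab

education_num = [12.0, 14.0, None, 15.0, None]
occupation = [None, "Exec-managerial", None, "Prof-specialty", "Other-service"]

filled, was_missing = fill_missing(education_num)
scaled = normalize(filled)
codes, vocab = categorify(occupation)
print(filled, was_missing)
print(codes, vocab)
```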

learn = tabular_learner(dls, metrics=accuracy); learn

<fastai.tabular.learner.TabularLearner at 0x7f901f571210>

learn.fit_one_cycle(5)

| epoch | train_loss | valid_loss | accuracy | time |

|---|---|---|---|---|

| 0 | 0.485079 | 0.465481 | 0.767813 | 00:05 |

| 1 | 0.458598 | 0.448505 | 0.780098 | 00:05 |

| 2 | 0.439319 | 0.444874 | 0.787776 | 00:05 |

| 3 | 0.447785 | 0.442088 | 0.787776 | 00:05 |

| 4 | 0.438344 | 0.440791 | 0.787623 | 00:05 |

Collaborative Filtering Example#

path = untar_data(URLs.ML_SAMPLE); path.ls()

(#1) [Path('/content/drive/MyDrive/PPV/S_Personal_Study/aiking/data/movie_lens_sample/ratings.csv')]

doc(CollabDataLoaders.from_csv)

CollabDataLoaders.from_csv[source]

CollabDataLoaders.from_csv(csv,valid_pct=0.2,user_name=None,item_name=None,rating_name=None,seed=None,path='.',bs=64,val_bs=None,shuffle=True,device=None)

Create a DataLoaders suitable for collaborative filtering from csv.

dls = CollabDataLoaders.from_csv(path/'ratings.csv')

learn = collab_learner(dls, y_range=(0.5, 5)) # y_range : Range of y values

learn.fine_tune(10) # without a pretrained model

| epoch | train_loss | valid_loss | time |

|---|---|---|---|

| 0 | 1.949988 | 1.816280 | 00:00 |

| epoch | train_loss | valid_loss | time |

|---|---|---|---|

| 0 | 1.752750 | 1.726716 | 00:00 |

| 1 | 1.590970 | 1.451683 | 00:00 |

| 2 | 1.256610 | 0.987660 | 00:00 |

| 3 | 0.925884 | 0.752191 | 00:00 |

| 4 | 0.752691 | 0.684037 | 00:00 |

| 5 | 0.691877 | 0.662923 | 00:00 |

| 6 | 0.663190 | 0.653058 | 00:00 |

| 7 | 0.651265 | 0.648660 | 00:00 |

| 8 | 0.639195 | 0.647364 | 00:00 |

| 9 | 0.628522 | 0.647159 | 00:00 |

learn.show_results()

| | userId | movieId | rating | rating_pred |

|---|---|---|---|---|

| 0 | 53.0 | 22.0 | 3.0 | 3.991087 |

| 1 | 14.0 | 6.0 | 4.0 | 3.812191 |

| 2 | 16.0 | 77.0 | 4.0 | 4.235012 |

| 3 | 94.0 | 89.0 | 4.0 | 3.715150 |

| 4 | 77.0 | 80.0 | 5.0 | 4.053984 |

| 5 | 41.0 | 5.0 | 5.0 | 3.524751 |

| 6 | 18.0 | 62.0 | 4.5 | 3.794177 |

| 7 | 87.0 | 62.0 | 5.0 | 4.103097 |

| 8 | 77.0 | 33.0 | 3.0 | 3.273965 |
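All values in `rating_pred` fall between 0.5 and 5 because `y_range=(0.5, 5)` squashes the raw model output into that interval with a scaled sigmoid. A minimal sketch of the idea, assuming a dot-product model with user and item biases (which, as far as I know, is the default for `collab_learner`); the vectors and biases are made-up numbers:

```python
import math

def sigmoid_range(x, lo, hi):
    # Map any real number into the open interval (lo, hi).
    return 1 / (1 + math.exp(-x)) * (hi - lo) + lo

def dot_bias_score(user_vec, item_vec, user_bias, item_bias, y_range=(0.5, 5)):
    # Raw score: dot product of the two embeddings plus both biases.
    raw = sum(u * m for u, m in zip(user_vec, item_vec)) + user_bias + item_bias
    return sigmoid_range(raw, *y_range)

midpoint = sigmoid_range(0.0, 0.5, 5)
score = dot_bias_score([0.3, -0.1, 0.8], [0.5, 0.2, 0.4], 0.1, -0.05)
print(midpoint, score)
```

A raw score of 0 maps to the midpoint of the range, and large positive or negative scores approach 5 and 0.5 without ever reaching them.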